GPT-5 Is Here — And It’s Worse Than GPT-4

GPT-5 was just released and is now available as the only model in ChatGPT.

And as usual with AI, the bloggers, tech influencers, and “AI news” sites are all racing to hype it up for clicks.

I’ve been using GPT models daily since GPT-3.5, and GPT-4 was… honestly, perfect.

It felt complete. Solid reasoning, smooth memory handling, natural conversation flow.

I couldn’t picture how they could make it better — unless maybe they made it faster or cheaper.

Well… they found a way to change it, but not in a good direction.

The first thing I asked it

Minutes into my first GPT-5 session, I typed:

“Who is Ardit Sulce?”

It replied: “I don’t know.”

Okay… maybe it’s just being cautious. So I asked again. Same answer.

Every other AI knows who that guy is because of his popular Python courses, but that’s okay, no offence taken.

Where it gets strange is that my ChatGPT already knows my name from memory.

That means it should at least be able to reference that I am Ardit Sulce.

Instead, it acted like it had just woken up from a nap with mild amnesia.

Giving it context made things even worse

I thought: fine, I’ll feed it the basics.

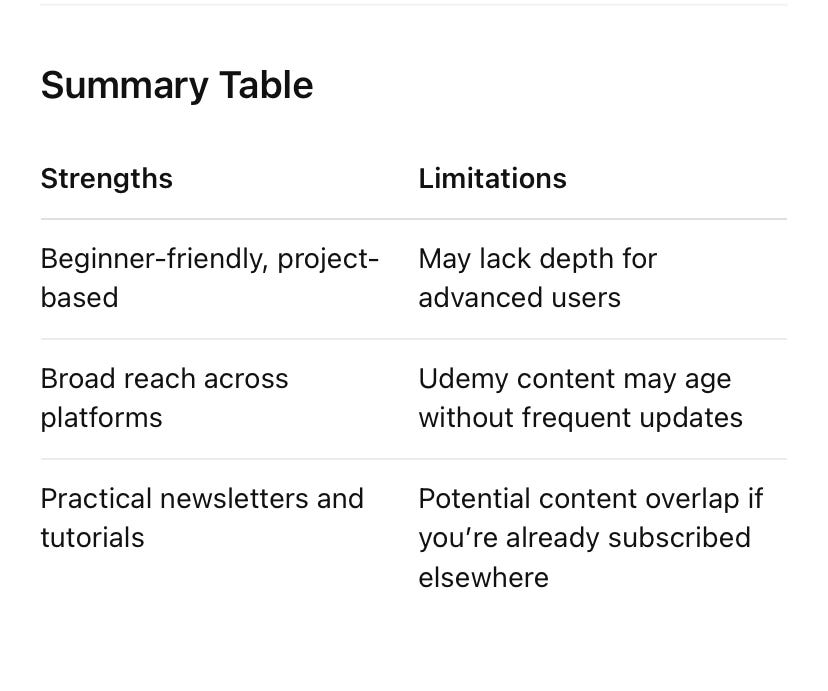

“Ardit Sulce is a Python instructor on Udemy with hundreds of thousands of students.”

It kind of acknowledged that… but then, out of nowhere, it spat out a table of completely irrelevant information.

It was the AI equivalent of an awkward shrug followed by “Here, I Googled something for you.”

The ugly imitation problem

Yes, sometimes people search “Ardit Sulce” on Google to see if my courses are good.

But GPT-5’s response wasn’t reasoning — it was imitating a rigid search result page.

It’s like it learned that “query about a person = output a table” without understanding why or whether the table is actually helpful.

GPT-4 would have taken my context, processed it, and given me a relevant, conversational answer.

GPT-5 gave me something that felt copied from a bad SERP template.

OpenAI seems lost

Here’s the bigger problem: it feels like OpenAI doesn’t know what to do anymore.

GPT-4 nailed the core purpose of a chatbot — a tool that understands you, remembers context, and responds naturally.

Instead of refining that, GPT-5 is bloated with “look what we can do” features that don’t actually improve the core experience.

It’s the classic problem of overdoing it.

The kind where you have a perfect recipe, but the chef can’t resist adding “just one more thing” until the whole dish tastes wrong.

Why GPT-4 might have been the peak

With GPT-4, I never once thought, “That was a dumber answer than I expected.”

It was steady, consistent, and didn’t overcomplicate simple requests.

GPT-5, after just a few hours of testing, feels like:

More random hallucinations disguised in structured formats.

More rigid, search-engine-style answers.

Fragile memory — like it can forget something it already knows about you mid-conversation.

A loss of focus on being a chatbot first and everything else second.

And I’m not the only one saying that. Users are reporting the same problems on Reddit:

https://www.reddit.com/r/ChatGPT/comments/1mkd4l3/gpt5_is_horrible/

And the worst news is you can’t change the model back to GPT-4 in ChatGPT anymore. You are stuck with GPT-5.

And that’s not the upgrade I was hoping for.

That’s a company forgetting what made their product great in the first place.